Synheart builds privacy-preserving human-state infrastructure that converts biosignals into structured emotional, cognitive, and behavioral state representations, computed locally and in real time.

Most software today has no human-state signal.

With Synheart, human state becomes a first-class signal.

Your physiological signals reveal patterns that are hard to notice.

Synheart's Human State Interface (HSI) interprets stress, focus, calm, and behavioral dynamics locally, ethically, and in real time, without sending sensitive data to the cloud.

Built for developers, researchers, and human-centered products.

Private, On-Device Processing

End-to-End State Inference

Local Inference Reliability

For decades, computers have listened to our words and tracked our behavior. Now they can understand the subtle rhythm beneath both: your heartbeat. Synheart's Human State Interface unifies:

Synheart is more than a model: it is an ecosystem connecting biosignal data, emotional inference, cognitive metrics, and interactive analytics. States are inferred from physiological and behavioral signals, not medical diagnoses.


Heart Rate: 72 BPM

Stress Indicator: Elevated

SWIP Score: 88

Stress Exposure: 86%

Focus Score: 75

Behavioral Coverage: 360°

Every heartbeat carries signals related to stress, focus, and calm.

Synheart interprets these signals locally and privately, enabling human-state–aware experiences without giving up privacy.

Privacy-first processing with zero cloud dependence.

Heart rate (HR), heart rate variability (HRV), electrodermal activity (EDA), and motion are combined for robust emotional and cognitive state estimation.

Benchmarked using peer-reviewed, public biosignal datasets.

Reliable accuracy for real-world human-state and focus applications.

Our work brings together cognitive neuroscience and biosignal modeling to understand how physiological patterns reflect human state.

We develop new HSI methods, open benchmarks, and collaborate with labs advancing human-state and cognitive research.

"We are not thinking machines that feel; we are feeling machines that think."

Antonio Damasio


We translate research into deployable human-state systems and tools.

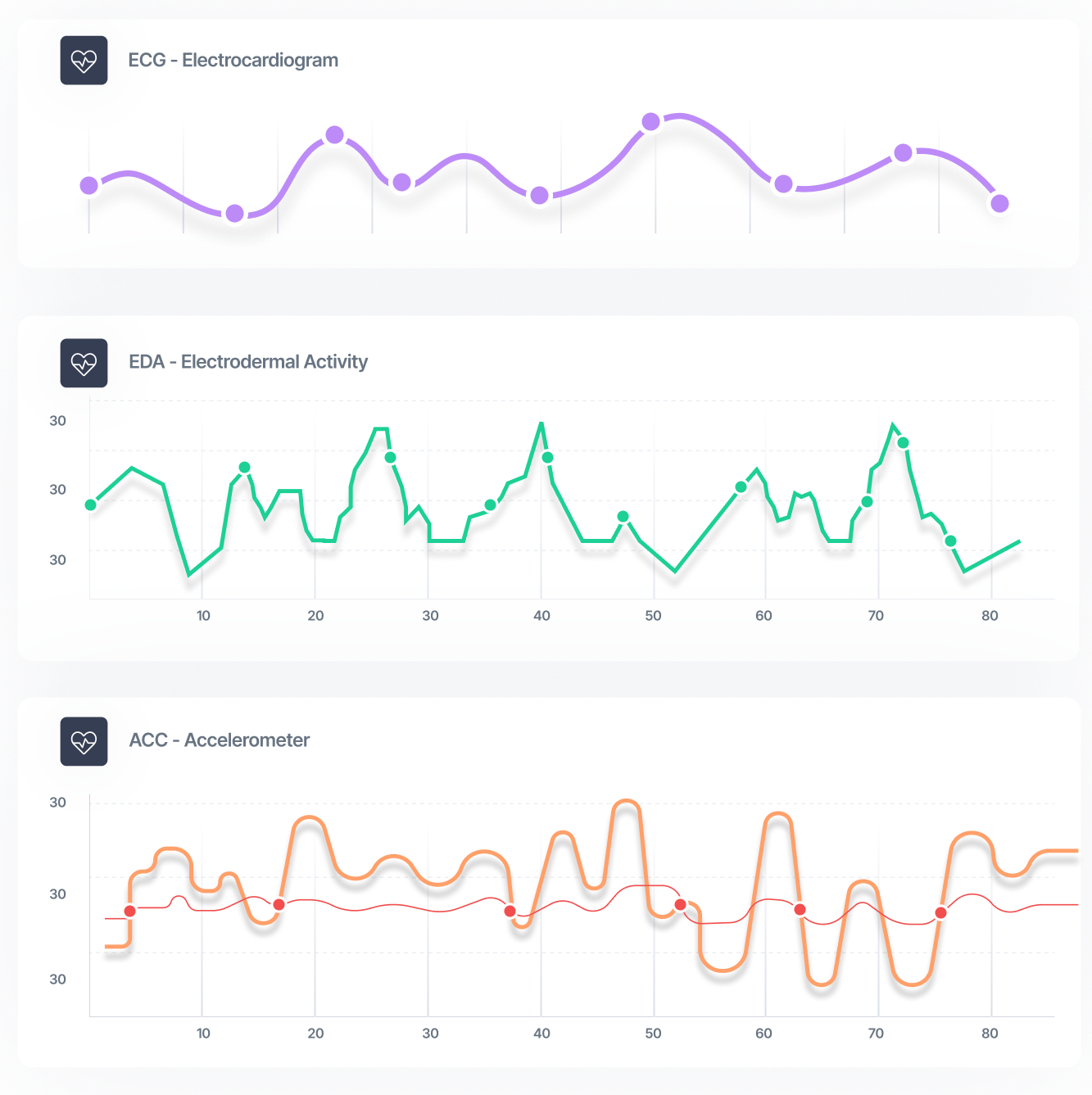

Our pipeline transforms raw physiological data into clean, interpretable signals through filtering, artifact removal, and feature extraction, all optimized to run on edge devices with minimal computational overhead.

Electrocardiogram and photoplethysmography signals for heart rate and cardiovascular monitoring

Heart rate variability metrics for stress, recovery, and autonomic state

Skin conductance signals reflecting arousal and emotional intensity

Motion and micro-movement data for context and behavior

Physiological thermal signals for state assessment
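For developers curious what the artifact-removal and feature-extraction stages might look like, here is a minimal sketch in plain Python. It is not Synheart's implementation: the function names `remove_artifacts` and `hrv_features`, the 20% median-based rejection rule, and the sample RR intervals are all illustrative assumptions. It drops implausible beat-to-beat (RR) intervals and then computes mean heart rate plus two standard heart rate variability metrics, SDNN and RMSSD.

```python
import math
from statistics import median


def remove_artifacts(rr_ms, tolerance=0.2):
    """Drop RR intervals (ms) that deviate from the series median by more
    than `tolerance` (a fraction). Hypothetical rule, chosen for simplicity."""
    if not rr_ms:
        return []
    med = median(rr_ms)
    return [rr for rr in rr_ms if abs(rr - med) <= tolerance * med]


def hrv_features(rr_ms):
    """Return mean heart rate (BPM), SDNN (ms), and RMSSD (ms).

    Simplification: successive differences are taken over the cleaned
    series, so a removed beat joins its two neighbours directly.
    """
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    mean_rr = sum(rr_ms) / len(rr_ms)
    # SDNN: sample standard deviation of the RR intervals.
    sdnn = math.sqrt(sum((rr - mean_rr) ** 2 for rr in rr_ms) / (len(rr_ms) - 1))
    # RMSSD: root mean square of successive differences.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return {"mean_hr_bpm": 60000.0 / mean_rr, "sdnn_ms": sdnn, "rmssd_ms": rmssd}


# Made-up RR intervals with one missed beat (1650 ms) and one spurious beat (400 ms).
rr = [820, 810, 835, 1650, 825, 815, 400, 830]
clean = remove_artifacts(rr)
features = hrv_features(clean)
print(clean)
print({name: round(value, 1) for name, value in features.items()})
```

Everything here uses only the standard library and a single pass over short lists, which is the kind of footprint an on-device pipeline would aim for. A production system would more likely interpolate over rejected beats than drop them outright.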

Whether you're a researcher, developer, or designer, if you believe human state belongs in intelligent systems, there's a place for you here.